TreeSeg: Hierarchical Topic Segmentation at Augmend

From large transcripts to titled chapters

Why chapters?

At Augmend we are building a platform that captures tribal knowledge for dev teams and makes it easy to learn from/automate away. Our platform lets you record your own session showing how to complete a task or sharing some information, or upload any video you want that has data that you want to extract. The system transforms all available raw session data streams (voice ASR + video/screenshares) into embeddings and structured data, uses it to extract knowledge from your sessions and then serves it back to you in various forms, including:

- Session analysis

- Automatically generated documentation

- Retrieval augmented generation (RAG)-based Q & A

During the analysis of a session, our system infers certain attributes that characterize it, such as a title and synopsis, keywords for easier retrieval, interesting questions that can be answered using the session content etc.

Perhaps the most central aspect of this process is the semantic segmentation of the session into chapters. A good set of chapters is useful because it organizes a session into parts and adds context to each part. It also provides a framework for systematically breaking down large inputs to fit the size constraints of downstream models (more on that in a future post :D).

In this blog post we will take a closer look at how, here at Augmend, we segment your sessions into chapters using our in-house developed chapter segmentation algorithm: TreeSeg

Before we get started:

- Paper: You can find our published preprint on TreeSeg here: https://arxiv.org/abs/2407.12028

- TreeSeg is open! Following the open source spirit, we decided to make TreeSeg public and available. The official implementation of the algorithm can be found here: https://github.com/AugmendTech/treeseg

A timeline of events

Before we get into algorithmic details about segmentations it is important to first define what it is that we are segmenting. Our approach is to construct a timeline of events that drive the narrative of what happened during the session.

The main modality that drives this narrative is voice. Audio is transcribed via automatic speech recognition (ASR) into a collection of utterances. For recorded sessions where one or more screens were shared via our client application, we also have access to a set of semantic actions:

- Sharing, focusing on and closing a shared window.

- Navigating to a URL.

- Executing a shell command.

We refer to utterances and actions as “events”. We order all events chronologically to compose the script of the narrative.

Similarities and topic shifts

An important consideration has to do with the continuum of availability of labeled data. Initially we have few, if any, data points from a new user. Ideally we would like our algorithm to start in unsupervised mode, borrowing strength from general-purpose embedding models. As we gather more and more labeled data from a user, we want to transition to a semi-supervised and then to a supervised mode.

Our approach follows the TextTiling framework introduced by Hearst (1997). The idea is to compute a sequence of similarities between consecutive chunks of text. Then, points of unusually low similarity should mark a transition between one topic to another.

Operating directly on raw text can be unwieldy ; we’d rather operate on numerical vectors. The BERT-based approach of Solbiati et al. (2021) (henceforth referred to as BertSeg) is geared towards segmenting meeting transcripts and can be regarded as a modern version of TextTiling. Each entry in the input transcript is represented by an embedding vector obtained via the RoBERTa (Liu et al. 2019) model.

Computing similarity between consecutive embedding vectors is straightforward (cosine similarity). One must be careful not to compare individual embeddings and for good reasons. Utterances by themselves can be severely lacking in context. As an example, take the following utterance:

“Sounds good!”

On its own it can hardly be of any use in identifying topic shifts. Suppose now that the utterance preceding it was either option A or option B:

- “Let’s move on to the next item in the agenda.”

- “I’d like to stay on this point for a bit.”

Clearly in the case of A this might be a good candidate point for a topic shift, whereas in the case of B such an event seems less likely.

The authors of BertSeg choose to group utterances into blocks of a predefined width and aggregate them by max-pooling along the temporal dimension. Instead, we compose a mini-conversation using the current utterance and up to W utterances in its immediate past. We then embed the whole mini-conversation together, essentially letting the embedding model do the aggregation instead of using a hard-coded operator (max-pooling). In practice, OpenAI's v2 (ADA) or v3 embedding models have turned out to be quite effective at this task.

TreeSeg

BertSeg proceeds in a manner similar to TextTiling by identifying local minima of similarity below a certain threshold (i.e. 1-2 standard deviations). These time points mark transitions between distinct topics. BertSeg is essentially answering the question “What are some points in time when the topic likely shifted?”

Instead, we ask the following question: “Suppose that there is a topic shift inside this segment. When did it happen?”

The questions are similar but the philosophy behind them is different. Behind the latter question there is the assumption that a topic shift is always there but after some point it is not significant enough to warrant further attention.

“But what makes a topic shift significant?” is a good follow-up question. Our intuition says that the answer is highly subjective. Two different users could arrive at segmentations of different granularities for the same session.

TreeSeg is motivated by an idealized UI that provides such an affordance to the user ; the ability to transition from coarse to fine chapter segmentations seamlessly and to choose the desired level of granularity.

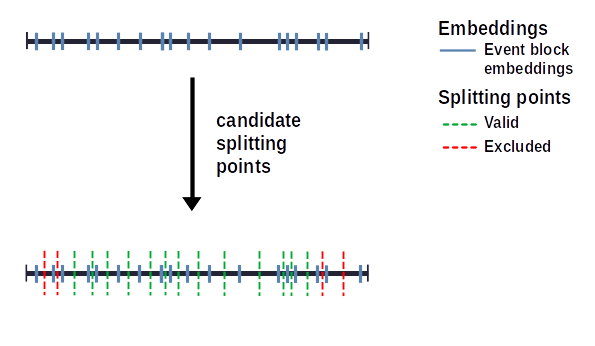

Let’s start with the full timeline of a session and assume that, somewhere in there, a topic shift is happening and our goal is to find when. To do so, we can consider all points where we could partition the timeline into two segments. Some of these points might not be valid for various reasons. One such reason is that the split is too close to the endpoints and would result in one large and one degenerate segment. These points are excluded from the set of candidates. The valid candidate set looks like this:

For scoring candidates, we use a temporal clustering loss. The intuition behind it is that a good candidate will split the timeline into two sets of temporally contiguous embeddings that are semantically similar and thus tightly clustered around their means.

The loss/potential of the timeline is defined as:

Where denotes embedding vectors and their mean. Splitting the timeline at candidate point results in two segments with total potential given by:

Where and are the means of the left and right resulting segments. The score of a candidate is then defined as the decrease in potential between the initial segment and the two resulting sub-segments.

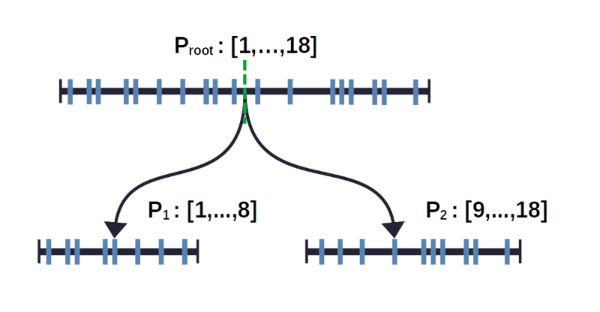

All we have to do now is compute for every and identify the with the highest score (largest decrease in variance). This optimally splits the initial segment in two. Here is an example partition of a timeline of 18 events into two smaller segments:

This mechanism can be applied recursively to the resulting segments and is the essence of TreeSeg. It can also be seen as a form of divisive clustering.

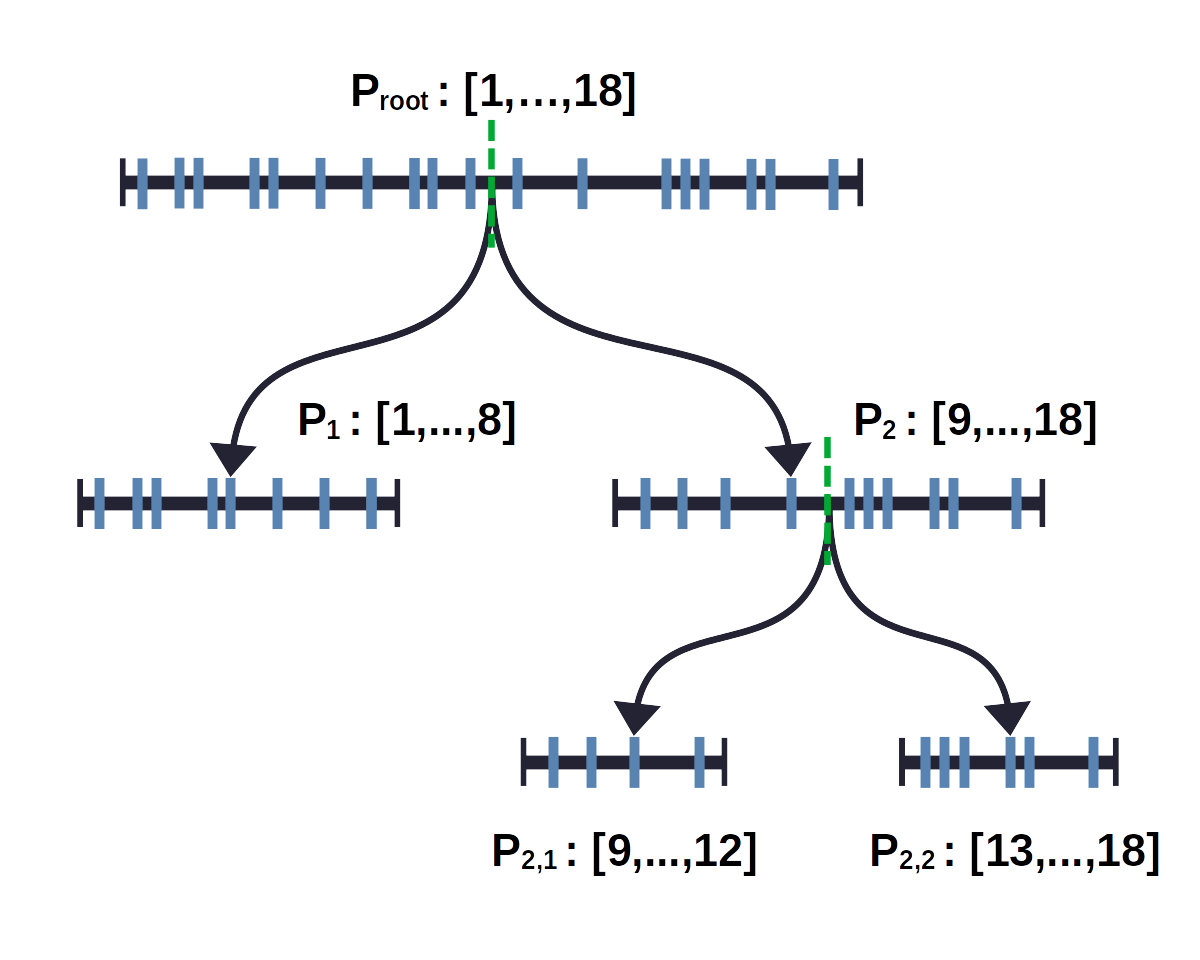

Here, one of the two resulting sub-segments is partitioned again by TreeSeg:

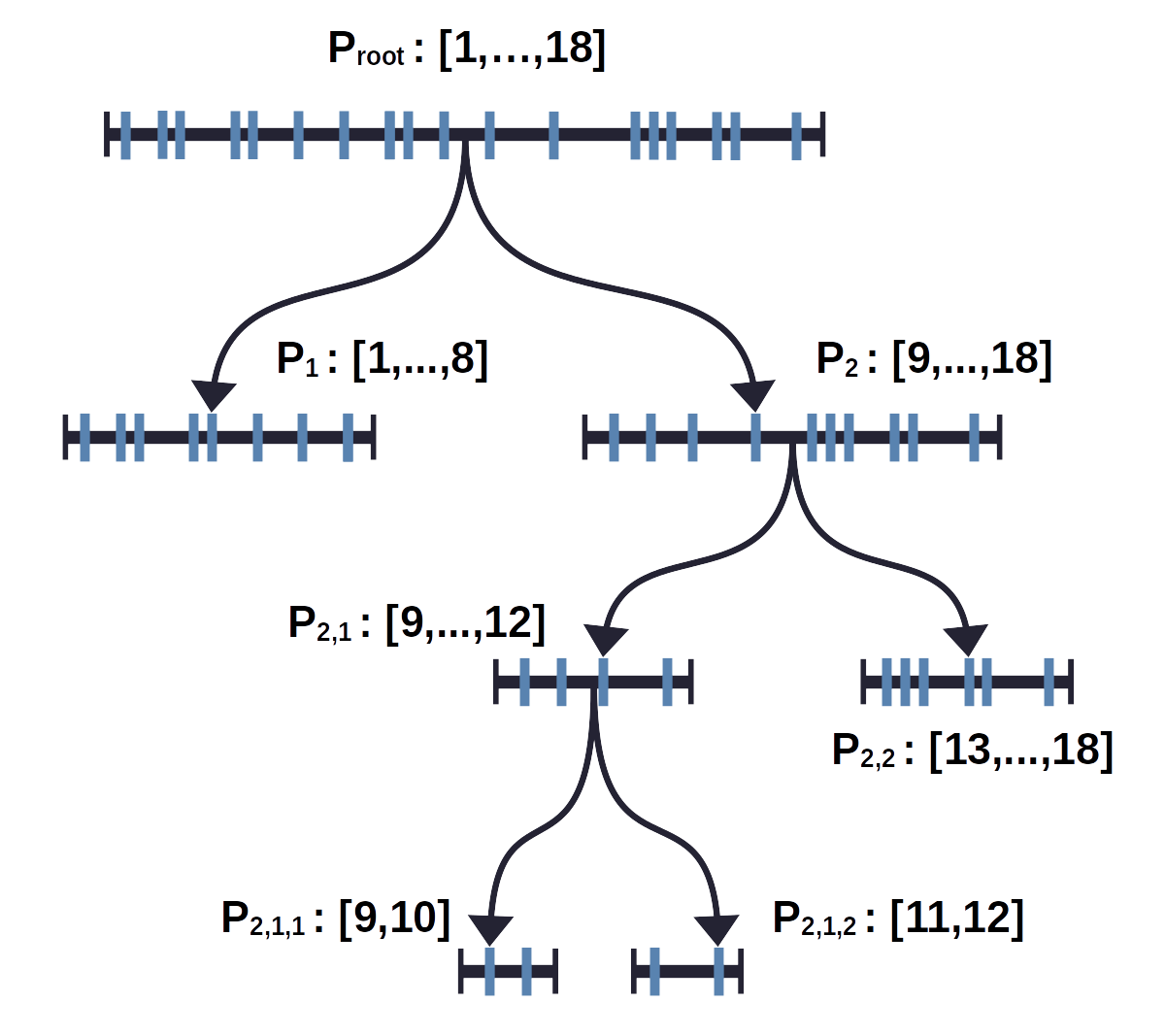

By repeating this process we can obtain a binary tree of segments, such as this one:

A temporal partition of the timeline is a collection of sets such that:

- Each set contains a subset of temporally contiguous events.

- The sets are mutually exclusive.

- The sets cover the entire timeline.

Any tree derived by applying the splitting mechanism recursively has the following property:

Boundary partition property:

Any boundary obtained by starting with the root and replacing a node with its two children is a temporal

partition of the timeline.

In other words any such boundary is a valid segmentation of the session into chapters. This provides a clean abstraction to the idealized UI that we described above. All we need to do is provide a suggested boundary as the starting point and let the user arrive at their desired segmentation by traveling up and down this tree by either replacing a segment with its children (splitting) or two children with their parent (merging). Note that we do not currently support this UI ; it only serves as a source of inspiration and as a north star for the future.

There are many details that I glossed over while transitioning from a single split to a deeper tree. Perhaps the two most important ones are:

- How do we choose candidates efficiently?

- How do we know when to stop?

On efficiency: One obvious optimization is that for each segment in the current boundary, the optimal splitting point can be computed exactly once. Being independent of the other segments and the state of the boundary, the optimal splitting point will remain the same up to the point when the segment is selected for partitioning. An efficient implementation of TreeSeg maintains a max-heap of (score, segment) pairs. Here is a high-level outline of one iteration of TreeSeg:

- Select the segment with the top score from the heap.

- Split it into two sub-segments at its precomputed optimal splitting point.

- Compute the optimal splitting points (and their scores) for the two sub-segments.

- Insert them back into the heap.

Another optimization has to do with computing the optimal splitting point itself. It can be shown that the temporal clustering loss can be computed for all candidates in linear time, by maintaining the cumulative sum and cumulative sum-of-squares of the embedding vectors.

Knowing when to stop is a form of art: TreeSeg stops splitting segments when a stopping criterion is met. One idea is to only consider splits with a difference in potential above some threshold or to stop splitting when the total potential cannot be reduced by more than a predefined percentage. Setting these thresholds turns out to be challenging and unwieldy. We take a more practical approach based on segment sizes. We define M to be the minimum viable size for a segment. TreeSeg will stop splitting a segment when doing so will produce non-viable sub-segments. This happens when the current segment size is <2M.

We compared TreeSeg with BertSeg and a few other approaches on the hierarchical segmentation task. Only TreeSeg is intrinsically hierarchical. Fortunately, baselines that follow the TextTiling approach can be extended to the hierarchical setting in a straightforward manner. TreeSeg outperforms the competition by a wide margin. Our intuition says that segmentation is not just a local task and as such, global information is important in identifying topic shifts. TreeSeg utilizes global information by splitting the timeline in a top-down manner.

For the comparisons we used the AMI and ICSI corpora for which we had to write our own parsers. We also collected a small-scale dataset of our own called TinyRec. TinyRec is a dataset consisting of transcripts obtained from 21 self-recorded sessions. Each transcript contains spoken utterances as transcribed via ASR and was manually annotated with two-level topic annotations.

Readers that are interested in more implementation or experimental details can refer to our publication: https://arxiv.org/abs/2407.12028

The official TreeSeg repo (https://github.com/AugmendTech/treeseg) makes multiple contributions:

- Code for neatly parsing AMI and ICSI.

- TinyRec itself.

- Adaptations of baselines to the hierarchical setting.

- An efficient implementation of TreeSeg.

You can use it, adapt it, play and build interesting things with it. If you do so, drop us a line at dimi@augmend.com. We would love to hear more.

Naturally, there are many details that I glossed over, such as how to get from segments to titled chapters. There are also many ideas and interesting directions for further exploration. In a future post I will describe how we use the resulting segment tree to partition large transcripts for downstream tasks, providing a scaffold to move between local and global context.

In the meantime here is an example segmentation produced by TreeSeg:

Segmentation is only one of many session intelligence features supported by our platform. You can explore Augmend’s full capabilities here: https://augmend.com/

We also recently launched AugAPI. You can find more details here: https://augmend.com/blog/augmend-platform